LangWatch

Overview

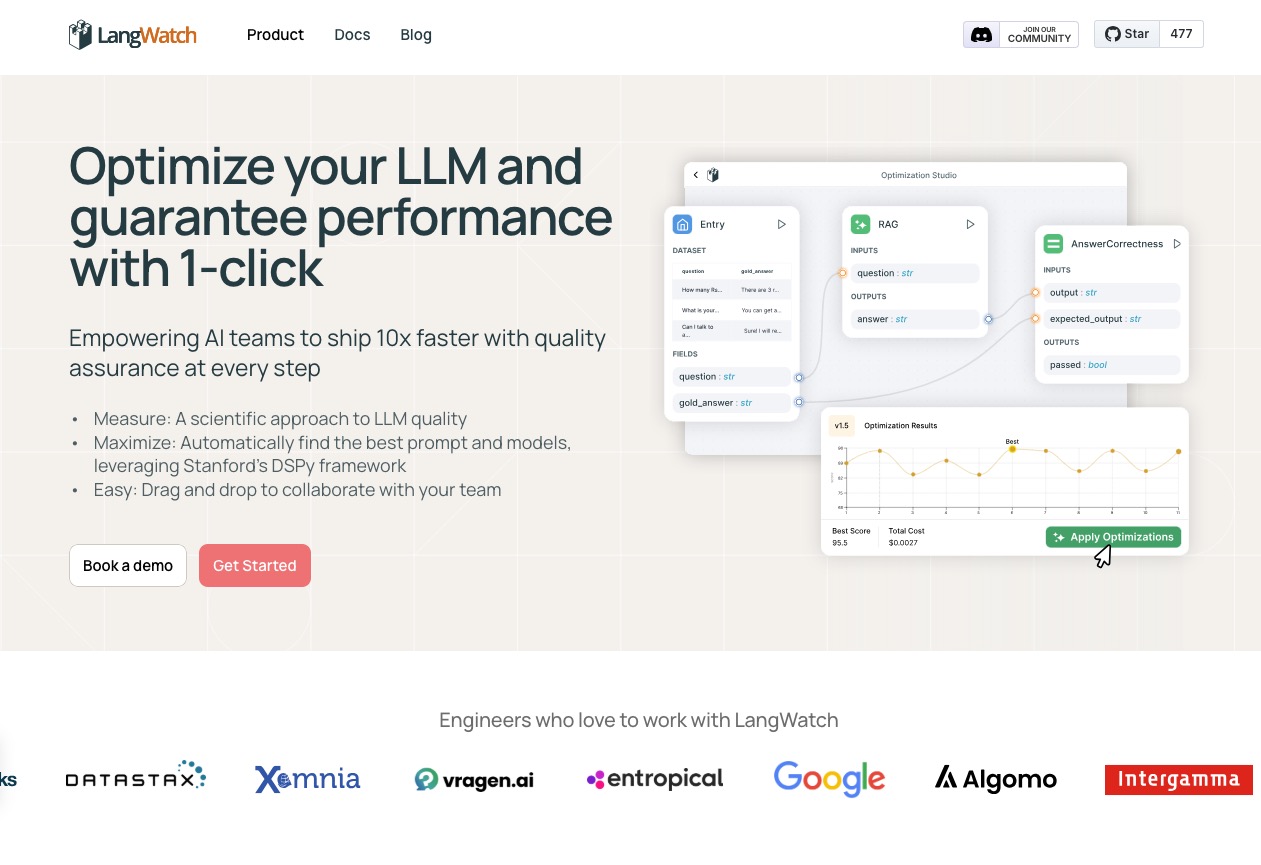

An LLM Optimization Studio that empowers AI teams to ship faster with quality assurance at every step, offering tools for monitoring, evaluating, and optimizing large language model applications.

LangWatch is a platform that helps AI teams develop and deploy large language model (LLM) applications efficiently and reliably. Its tools cover monitoring, evaluating, and optimizing LLM workflows throughout the development process. The Optimization Studio provides a visual interface for creating and refining LLM pipelines, and integration with frameworks such as Stanford's DSPy lets users automate prompt optimization and evaluate model outputs. The platform supports various LLMs and providers, including OpenAI, Claude, Azure, Gemini, and Hugging Face, and is designed to integrate into existing tech stacks.

Autonomy level

47%

Use cases for LangWatch include:

- Monitoring and evaluating the performance of LLM applications.

- Automating prompt optimization to enhance model outputs.

- Ensuring quality assurance in AI development workflows.

- Integrating with various LLM providers and existing tech stacks.
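The evaluation and prompt-optimization use cases above can be illustrated with a minimal, generic sketch. It does not use the LangWatch SDK or any provider API: `fake_llm`, `exact_match`, `evaluate`, and `pick_best_template` are hypothetical names introduced only for illustration, and the "optimization" here is a plain search over two candidate prompt templates rather than anything DSPy or LangWatch actually does.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for a real LLM call (assumption: not a LangWatch or provider API).
def fake_llm(prompt: str) -> str:
    # Gives a terse answer only when the prompt asks for one word.
    if "one word" in prompt and "capital of France" in prompt:
        return "Paris"
    return "The capital of France is Paris."

def exact_match(output: str, expected: str) -> float:
    """Score 1.0 when the output equals the expected answer, ignoring case and outer whitespace."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0

def evaluate(template: str, dataset: List[Dict[str, str]], llm: Callable[[str], str]) -> float:
    """Average exact-match score of one prompt template over a small dataset."""
    scores = [
        exact_match(llm(template.format(question=row["question"])), row["expected"])
        for row in dataset
    ]
    return sum(scores) / len(scores)

def pick_best_template(templates: List[str], dataset: List[Dict[str, str]],
                       llm: Callable[[str], str]) -> str:
    """Naive prompt optimization: keep the template with the highest average score."""
    return max(templates, key=lambda t: evaluate(t, dataset, llm))

# Illustrative data, invented for this sketch.
DATASET = [{"question": "What is the capital of France?", "expected": "Paris"}]
TEMPLATES = ["{question}", "Answer in one word. {question}"]

if __name__ == "__main__":
    for t in TEMPLATES:
        print(repr(t), evaluate(t, DATASET, fake_llm))
    print("best:", repr(pick_best_template(TEMPLATES, DATASET, fake_llm)))
```

In a real setup, the stub would be replaced by an actual model call and the metric by one suited to the task; the loop of scoring candidate prompts against a dataset stays the same.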
